Farming seaweed using robots

Background

This project is part of the RI SEAWEED project, which states:

Cultivation of the oceans is a key requirement for future sustainable and efficient utilisation of the ocean resources required to meet demands for food, feed, materials, and energy for a growing global population. Norway, with one of the world’s longest coastlines, can take a leading role. Recent work by SINTEF suggests that Norway has a massive potential to cultivate a wide variety of seaweeds and develop new bioeconomy.

To cultivate the oceans efficiently, this project aims to use robots to monitor the growth of seaweed.

Scope

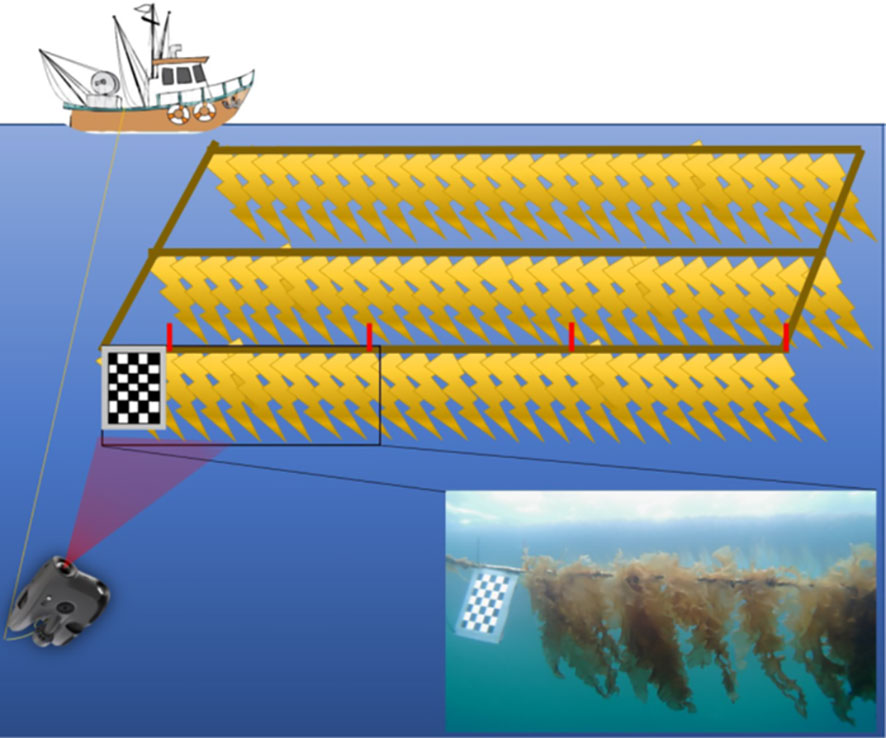

Farmed seaweed typically grows vertically on long horizontal lines. A seaweed farm can consist of many lines, separated by around 10 meters, each spanning several hundred meters. The scope of this project is to use robots, such as an autonomous surface vehicle, to assess the quantity and quality of the seaweed in a farm. The project is at an early stage and we are still investigating different options, so there is room to test various approaches in simulation to arrive at the most promising concept.
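To get a feel for the scale of a survey, the sketch below generates a simple back-and-forth (lawnmower) route along the lines of a farm and computes its length. The farm geometry (10 lines of 300 m, 10 m apart), the vehicle speed of 1.5 m/s, and the function names are illustrative assumptions, not part of the project description; a real mission plan would also have to handle currents, turning radius and the mooring layout.

```python
import math
from typing import List, Tuple

Waypoint = Tuple[float, float]  # (x, y) in metres, hypothetical local farm frame


def lawnmower_waypoints(n_lines: int, line_length_m: float,
                        spacing_m: float, offset_m: float = 0.0) -> List[Waypoint]:
    """Back-and-forth (boustrophedon) waypoints along parallel farm lines.

    Assumed farm frame: line k runs along the x-axis from x = 0 to
    x = line_length_m at y = k * spacing_m. The vehicle follows each line at a
    lateral offset_m and reverses direction on every other line.
    """
    waypoints: List[Waypoint] = []
    for k in range(n_lines):
        y = k * spacing_m + offset_m
        if k % 2 == 0:
            waypoints += [(0.0, y), (line_length_m, y)]
        else:
            waypoints += [(line_length_m, y), (0.0, y)]
    return waypoints


def path_length(waypoints: List[Waypoint]) -> float:
    """Total length of the straight-line path through the waypoints."""
    return sum(math.dist(a, b) for a, b in zip(waypoints, waypoints[1:]))


if __name__ == "__main__":
    # Assumed example farm: 10 lines, 300 m long, 10 m apart.
    wps = lawnmower_waypoints(n_lines=10, line_length_m=300.0, spacing_m=10.0)
    length = path_length(wps)
    print(f"{length:.0f} m")  # 3090 m: 10 x 300 m along lines + 9 x 10 m transits
    print(f"{length / 1.5 / 60:.0f} min at an assumed 1.5 m/s")
```

Even this idealized farm gives a route of about 3 km, which suggests why survey time, energy and sensor coverage per pass are worth comparing between concepts.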

A promising technology for assessing the quality of the seaweed is underwater hyperspectral imaging (UHI), which can “see” hundreds of colors (compared to only three in an RGB camera or the human eye). This makes it possible to detect smaller variations in the health of the seaweed, and to separate the seaweed from any biofouling organisms growing on it. However, there is no free lunch: UHI cameras typically have a pushbroom configuration, capturing “1D pictures” (single lines) that must be assembled into a 2D image by accounting for the position and attitude of the camera when each line was captured. This is known as geo-referencing, and it requires accurate knowledge of the position, attitude and timing of the camera. The motion of the seaweed further complicates geo-referencing. As an alternative, one may sacrifice some spectral resolution by opting for a multispectral camera instead (~10 spectral bands, compared to several hundred for hyperspectral), which captures 2D images that are easier to interpret.
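The geo-referencing step can be sketched under strong simplifications. The example below assumes a hypothetical setup in which a sideways-looking pushbroom camera on a vehicle moving along a farm line images a flat, vertical, motionless seaweed curtain at a known horizontal distance. Each scanline is vertical, so every pixel has a fixed elevation angle, and vehicle roll tilts the whole scanline. Navigation data (along-line position, camera depth, roll) are interpolated to the scanline timestamps, which is where timing enters. Yaw, pitch, lens distortion and seaweed motion are ignored, and the function name and parameters are illustrative only.

```python
import numpy as np


def georeference_pushbroom(line_times, nav_times, nav_s, nav_z, nav_roll,
                           pixel_angles, distance_m):
    """Map pushbroom pixels onto a flat, vertical seaweed curtain (hypothetical model).

    Assumptions (illustrative only):
    - the camera looks sideways at a vertical plane distance_m away, horizontally;
    - each scanline is vertical; pixel_angles are elevation angles in radians,
      positive downwards;
    - roll (radians, positive tilts the scanline downwards) adds to every pixel
      angle; yaw, pitch and seaweed motion are ignored;
    - z is depth in metres, positive downwards; s is position along the farm line.

    Returns (s, z), each of shape (n_scanlines, n_pixels).
    """
    line_times = np.asarray(line_times, dtype=float)
    pixel_angles = np.asarray(pixel_angles, dtype=float)

    # Interpolate the navigation solution to the time each scanline was captured.
    s = np.interp(line_times, nav_times, nav_s)
    z_cam = np.interp(line_times, nav_times, nav_z)
    roll = np.interp(line_times, nav_times, nav_roll)

    # Viewing angle of every pixel = its fixed elevation angle + the vehicle roll.
    angles = pixel_angles[None, :] + roll[:, None]

    # Intersect each pixel ray with the vertical plane at distance_m.
    z = z_cam[:, None] + distance_m * np.tan(angles)
    s_grid = np.repeat(s[:, None], pixel_angles.size, axis=1)
    return s_grid, z
```

Each pixel spectrum can then be placed at its (s, z) coordinate and resampled onto a regular grid to form the 2D image. In this model, a timing offset between camera and navigation data shifts every scanline along the line by the vehicle speed times the offset, and an unmodelled roll error moves pixels up or down the curtain.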

| Farmed seaweed, source | Seaweed farm, Overrein et al. (2024) |
|---|---|

Proposed tasks

The tasks will vary with the focus of the project, as decided by the student (you) and the supervisor (me), but the following steps are common:

- Familiarize yourself with the state of the art in seaweed farming, from both industry and the literature

- Propose and discuss various concepts for assessing the quality and quantity of seaweed in a farm

- Set up a simulation environment for a seaweed farm, a robot/autonomous vessel, and cameras

- Simulate promising concepts in the simulation environment to highlight their strengths and weaknesses

- Discuss the results with a critical eye, and conclude the work in a written report

The work will continue as a master's thesis in the spring semester, where field trials could be an option.

Prerequisites

The following list of competences is meant to indicate the direction of the project rather than to serve as requirements. No candidate is expected to be an expert in all these domains; the background and interests of the candidate will help determine the focus of the project.

- guidance, navigation, control and simulation of robots, particularly autonomous surface vessels

- robotic/computer vision, geo-referencing

- ROS or other robotic middleware

- Gazebo, Unity, Godot or other simulation engines

- hyperspectral/multispectral imaging

Contact

Contact supervisor Kristoffer Gryte or co-supervisor Morten Alver.

References

Overrein, Martin Molberg, Phil Tinn, David Aldridge, Geir Johnsen, and Glaucia M Fragoso. 2024. “Biomass Estimations of Cultivated Kelp Using Underwater RGB Images from a mini-ROV and Computer Vision Approaches.” Frontiers in Marine Science 11: 1324075.