Underwater image enhancement for biomass estimation in seaweed cultivation

Background

This project is part of the RI SEAWEED project, which states:

Cultivation of the oceans is a key requirement for future sustainable and efficient utilisation of the ocean resources required to meet demands for food, feed, materials, and energy for a growing global population. Norway, with one of the world’s longest coastlines, can take a leading role. Recent work by SINTEF suggests that Norway has a massive potential to cultivate a wide variety of seaweeds and develop new bioeconomy.

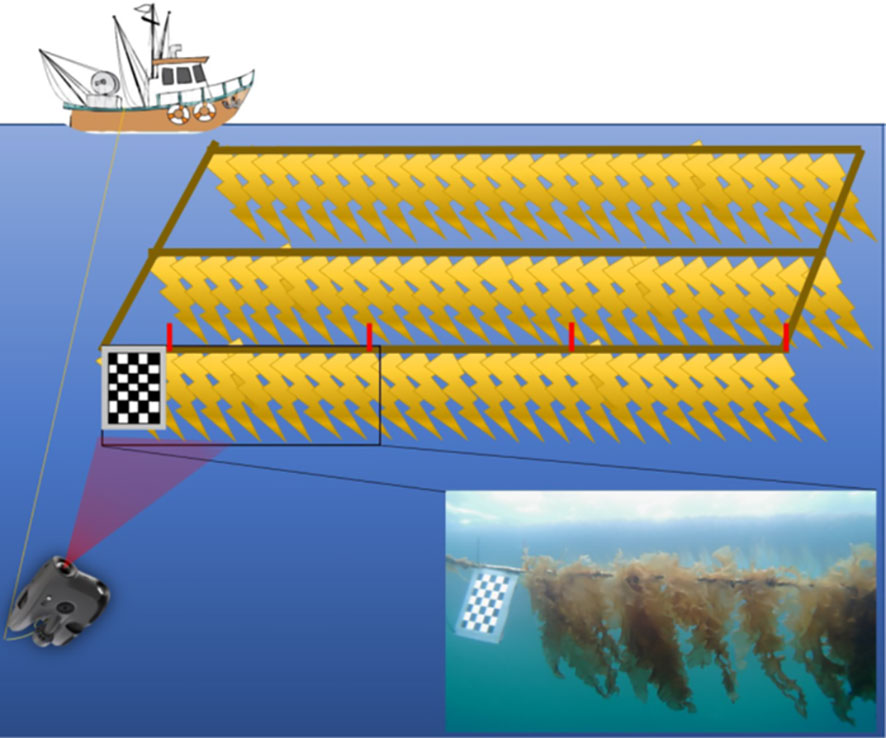

In order to cultivate the oceans in an efficient manner, this project aims to use robots to monitor the growth of seaweed.

By combining AI-powered computer vision with autonomous robotics, this project addresses both climate challenges and the future of sustainable food production.

| Farmed seaweed, source | Seaweed farm, Overrein et al. (2024) |
|---|---|

Scope

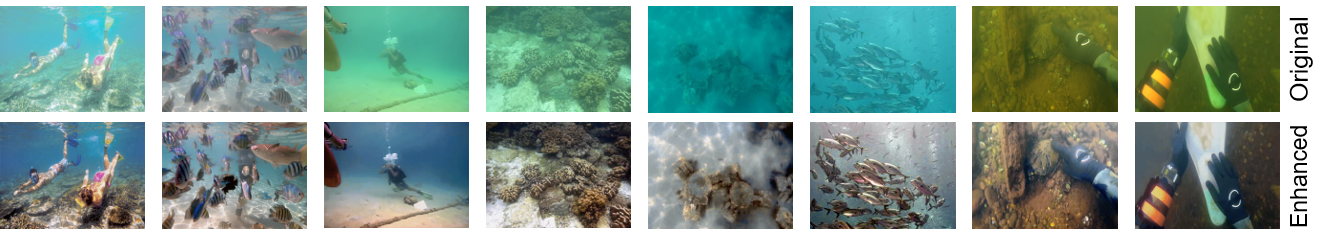

Using cameras underwater poses several challenges: light attenuation, color distortion, and scattering from particles in the water make images blurry, with a low signal-to-noise ratio. This makes it difficult to obtain high-quality images for assessing seaweed health and growth. These effects also vary greatly with season and environment (available light and the amount of particles in the water), which makes it difficult to compare data from different locations and times of year and limits the usability of camera data for biomass estimation in seaweed farms.
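The attenuation and scattering effects described above are often summarised with a simplified image formation model. The version below is a common textbook form and is given only as an illustration; the symbols are introduced here and are not taken from the project description.

$$
I_c(x) = J_c(x)\, t_c(x) + B_c \bigl(1 - t_c(x)\bigr), \qquad t_c(x) = e^{-\beta_c\, d(x)}
$$

Here $I_c(x)$ is the observed intensity in color channel $c$ at pixel $x$, $J_c(x)$ is the unattenuated scene radiance, $B_c$ is the background (veiling) light caused by scattering, $t_c(x)$ is the transmission, $\beta_c$ is a channel-dependent attenuation coefficient, and $d(x)$ is the distance from camera to scene. Because $\beta_c$ is largest for red light in water, distant objects lose red first, which gives the typical blue-green cast. More refined models use separate coefficients for the direct and backscattered components.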

Fortunately, recent work on underwater image enhancement, using both deep learning and more classical techniques, has shown promising results in improving the quality of underwater images. These techniques can help mitigate the effects of light attenuation and scattering, leading to clearer and more detailed images that are better suited for analysis.
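As a rough illustration of what a "classical" technique can look like, the sketch below applies gray-world white balance, one of the simplest color-correction heuristics. It is not a method proposed by this project. The function name `gray_world` and the toy pixel values are made up for the example, and it uses plain Python lists to stay dependency-free; a real pipeline would more likely operate on NumPy or OpenCV arrays.

```python
def gray_world(pixels):
    """Gray-world white balance on a list of (r, g, b) floats in [0, 1]."""
    n = len(pixels)
    # Mean of each colour channel over the whole image.
    means = [sum(p[c] for p in pixels) / n for c in range(3)]
    gray = sum(means) / 3.0
    # Per-channel gain that moves each channel mean to the common gray level.
    gains = [gray / m if m > 0 else 1.0 for m in means]
    # Apply the gains and clip to the valid range.
    return [tuple(min(1.0, p[c] * gains[c]) for c in range(3)) for p in pixels]


# Toy "image" (assumed values): four pixels with a strongly attenuated
# red channel, mimicking the blue-green cast of underwater footage.
image = [(0.10, 0.50, 0.60), (0.05, 0.40, 0.55),
         (0.15, 0.60, 0.70), (0.10, 0.50, 0.55)]
balanced = gray_world(image)
channel_means = [sum(p[c] for p in balanced) / len(balanced) for c in range(3)]
print([round(m, 3) for m in channel_means])
```

After correction the three channel means coincide, which removes the global color cast. It does nothing about scattering or contrast loss, which is why more elaborate classical and learned methods are of interest.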

| Example underwater image enhancement, source |
|---|

Proposed tasks

The tasks will vary based on the focus of the project, as decided by the student (you) and the supervisor (me), but the steps below are common to all variants:

- Familiarize yourself with the state of the art in seaweed farming, both in industry and in the literature

- Perform a literature review of state-of-the-art methods for underwater image enhancement, see e.g. the curated lists CXH-Research/Underwater-Image-Enhancement (summary of publicly available underwater image enhancement methods), fansuregrin/Awesome-UIE (Awesome Underwater Image Enhancement (UIE) methods), and YuZhao1999/UIE (a resource list for underwater image enhancement)

- Compare the performance, computational load and payload requirements of the most promising methods using an existing dataset. The goal is to reduce the impact of seasonal variations on existing seaweed biomass estimation methods (Overrein et al., 2024).

- Exciting extensions could include:

  - Train custom neural networks for biomass estimation

  - Deploy models on edge devices for real-time processing

  - Field trials with autonomous vessels in Norwegian fjords

- Discuss the results with a critical eye, and conclude the work in a written report
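The comparison step in the list above could be organised roughly as in the following sketch, which scores each candidate method on image quality and on runtime. Everything here is an assumption made for illustration: the helper names `psnr` and `benchmark`, the stand-in methods `identity` and `brighten`, and the toy pixel data. PSNR needs a clean reference image, which real underwater datasets often lack, so no-reference metrics such as UIQM or UCIQE, or the error of the downstream biomass estimate, may be more relevant in practice.

```python
import math
import time


def psnr(reference, estimate, peak=1.0):
    """Peak signal-to-noise ratio in dB between two equally sized pixel lists."""
    squared_errors = [
        (r - e) ** 2
        for ref_px, est_px in zip(reference, estimate)
        for r, e in zip(ref_px, est_px)
    ]
    mse = sum(squared_errors) / len(squared_errors)
    return math.inf if mse == 0 else 10.0 * math.log10(peak ** 2 / mse)


def benchmark(methods, degraded, reference, repeats=5):
    """Return {name: (psnr_db, mean_seconds_per_image)} for each method."""
    results = {}
    for name, method in methods.items():
        start = time.perf_counter()
        for _ in range(repeats):
            output = method(degraded)
        elapsed = (time.perf_counter() - start) / repeats
        results[name] = (psnr(reference, output), elapsed)
    return results


# Hypothetical stand-ins for real enhancement methods.
def identity(pixels):
    return list(pixels)


def brighten(pixels, gain=1.5):
    return [tuple(min(1.0, v * gain) for v in px) for px in pixels]


# Toy data (assumed values): a reference image and a darker, degraded copy.
reference = [(0.4, 0.6, 0.8), (0.2, 0.4, 0.6)]
degraded = [(0.2, 0.3, 0.4), (0.1, 0.2, 0.3)]

results = benchmark({"identity": identity, "brighten": brighten},
                    degraded, reference)
for name, (quality_db, seconds) in results.items():
    print(f"{name}: PSNR={quality_db:.1f} dB, {seconds * 1e6:.1f} us/image")
```

Keeping quality and timing in one table makes the trade-off visible, which matters if the chosen method later has to run on an edge device with limited compute.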

What you will learn

This project offers hands-on experience with cutting-edge technologies at the intersection of AI, robotics, and marine biology. Depending on your background and interests, you will develop skills in:

- Deep learning for computer vision: Work with state-of-the-art neural network architectures (CNNs, GANs, Transformers) for underwater image enhancement

- AI model evaluation and deployment: Compare different methods based on performance, computational efficiency, and real-world applicability

- Robotic vision systems: Apply computer vision techniques to solve real-world problems in challenging underwater environments

- Autonomous systems: Potential exposure to autonomous surface vessels, sensor integration, and maritime robotics

- Research methodology: Literature review, experimental design, critical analysis, and scientific writing

You are not expected to have prior expertise in all of these areas; your interests will help shape the project focus.

Contact

Contact supervisor Kristoffer Gryte or co-supervisor Morten Alver.

References

Overrein, Martin Molberg, Phil Tinn, David Aldridge, Geir Johnsen, and Glaucia M Fragoso. 2024. “Biomass Estimations of Cultivated Kelp Using Underwater RGB Images from a mini-ROV and Computer Vision Approaches.” Frontiers in Marine Science 11: 1324075.